Using Python boto3 and AWS Lambda to send an S3 file as an email attachment


We have a requirement to send a CSV file stored in an S3 bucket as an email attachment.

AWS Services Used

AWS S3: stores the CSV file that needs to be sent by email.

AWS IAM: provides the roles and permissions the services need to interact with each other.

AWS SES: Amazon SES is a cloud email service that can be integrated into any application to send email, including bulk email.

AWS Lambda: AWS Lambda is a serverless compute service that runs your code in response to events and automatically manages the underlying compute resources for you.

AWS Lambda has three components:

A function. This is the actual code that performs the task.

A configuration. This specifies how your function is executed.

An event source (optional). This is the event that triggers the function. A function can be triggered by several AWS services or by a third-party service.
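The three components map onto the code later in this article: the handler is the function, the `event` dict comes from the event source, and settings such as sender, recipient and region belong in the configuration. Below is a minimal sketch, assuming you move those settings into Lambda environment variables instead of hard-coding them. The names `SENDER_EMAIL`, `RECIPIENT_EMAIL` and `SES_REGION` are hypothetical. `AWS_REGION` is set by Lambda itself and is used here as a fallback.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class EmailConfig:
    sender: str
    recipient: str
    region: str


def load_config(environ=os.environ) -> EmailConfig:
    """Read email settings from environment variables.

    SENDER_EMAIL, RECIPIENT_EMAIL and SES_REGION are hypothetical names
    that you would define in the function's configuration.
    """
    return EmailConfig(
        sender=environ["SENDER_EMAIL"],
        recipient=environ["RECIPIENT_EMAIL"],
        # Fall back to the region the function runs in (set by Lambda)
        region=environ.get("SES_REGION", environ.get("AWS_REGION", "")),
    )


def lambda_handler(event, context):
    # Function: this code. Configuration: load_config(). Event source: `event`.
    config = load_config()
    records = event.get("Records", [])
    return {"sender": config.sender, "records": len(records)}
```

Keeping the addresses in configuration means you can change them without redeploying the code.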

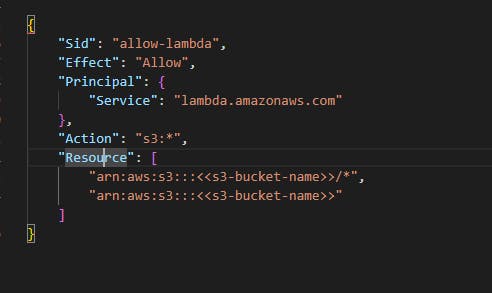

The S3 bucket requires the following permissions.

The above policy allows the role attached to the Lambda function to perform all S3 actions (Allow) on the bucket mentioned.

The above policy allows the Lambda service to access the S3 bucket mentioned.

PS: You can always customize the IAM policies to suit your requirements.
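As one way to customize them, here is a sketch of a narrower identity policy for the Lambda role. It assumes the email function only needs to read the uploaded object, since `download_file` needs `s3:GetObject` rather than all S3 actions. The bucket name, role name and the policy name pattern are placeholders, not values from the original setup.

```python
import json


def s3_read_policy(bucket_name: str) -> dict:
    """Identity policy letting a role read objects from one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadUploadedObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                # Object-level actions apply to the bucket's objects, hence "/*"
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


def attach_inline_policy(iam_client, role_name: str, bucket_name: str) -> None:
    """Attach the policy inline to the Lambda execution role.

    role_name and the "<bucket>-read" policy name are placeholders.
    """
    iam_client.put_role_policy(
        RoleName=role_name,
        PolicyName=f"{bucket_name}-read",
        PolicyDocument=json.dumps(s3_read_policy(bucket_name)),
    )
```

Usage would look like `attach_inline_policy(boto3.client("iam"), "<<lambda-role-name>>", "<<bucketname>>")`. Passing the client in keeps the policy logic testable without AWS credentials.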

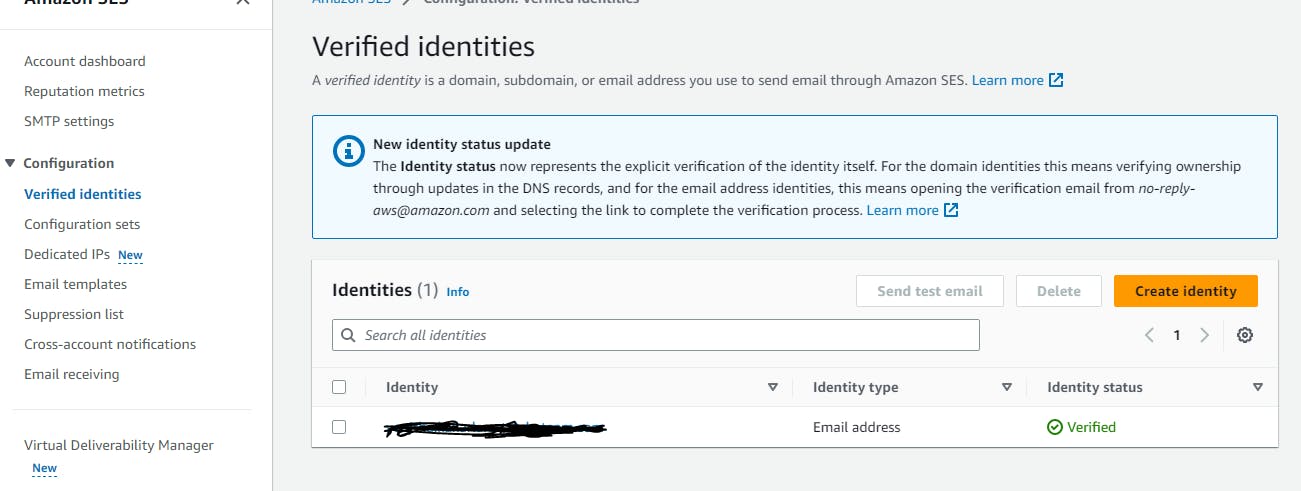

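Add your email address as a verified identity in Amazon SES. While your account is in the SES sandbox, both the sender and the recipient addresses must be verified.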
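The same verification can be scripted with the SES (v1) client, which is the client the Lambda code below uses. This is a minimal sketch: `verify_email_identity` makes SES send a confirmation email to the address, and `get_identity_verification_attributes` reports its status. The helper names are hypothetical.

```python
def request_verification(ses_client, address: str) -> None:
    """Ask SES to send a verification email to `address`."""
    ses_client.verify_email_identity(EmailAddress=address)


def is_verified(ses_client, address: str) -> bool:
    """Return True once SES reports the identity's status as "Success"."""
    resp = ses_client.get_identity_verification_attributes(Identities=[address])
    attrs = resp["VerificationAttributes"].get(address, {})
    # Other possible statuses include "Pending" and "Failed"
    return attrs.get("VerificationStatus") == "Success"
```

With `ses = boto3.client("ses", region_name="<<aws-region>>")`, you would call `request_verification(ses, "<<emailID>>")` for both the sender and the recipient, click the link in each email, and then check `is_verified`.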

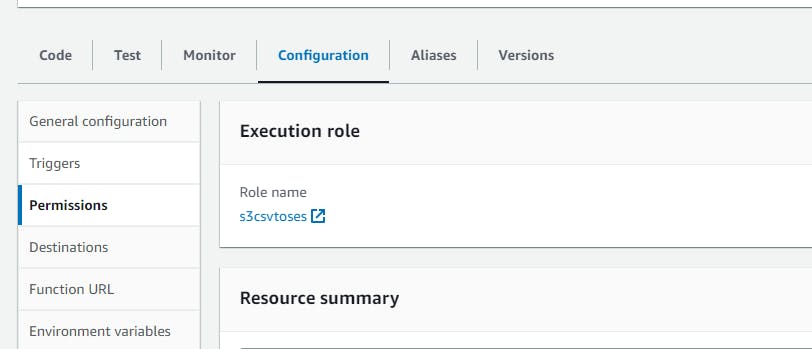

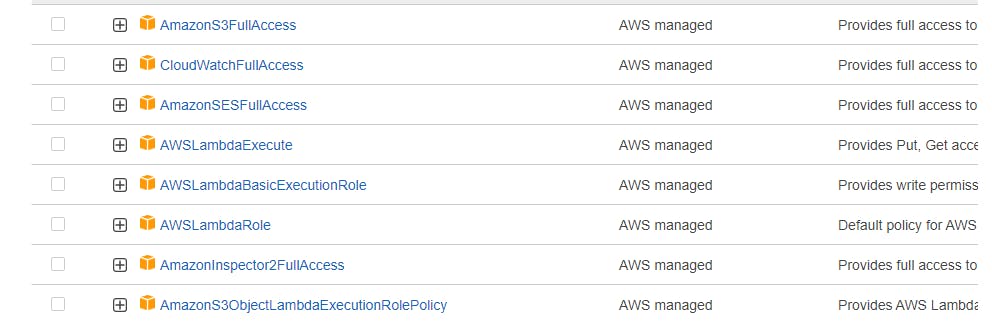

For the Lambda execution role, we attached the AWS managed policies shown in the screenshots. As mentioned earlier, you can customize these to suit your requirements.

Add the following Python code to the Lambda function. It downloads the uploaded file from S3 and sends it as an email attachment.
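If you prefer to create the execution role from code, the sketch below builds the standard trust policy that lets the Lambda service assume the role and then attaches AWS managed policies. The three policy ARNs are real AWS managed policies but are an assumption about what this setup needs; the article's screenshots show the actual selection. The role name is a placeholder.

```python
import json

# Trust policy that lets the Lambda service assume the execution role
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# Example AWS managed policies (an assumption; choose the ones your setup needs)
MANAGED_POLICY_ARNS = [
    "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole",
    "arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess",
    "arn:aws:iam::aws:policy/AmazonSESFullAccess",
]


def create_execution_role(iam_client, role_name: str) -> str:
    """Create the role, attach the managed policies, and return the role ARN."""
    role = iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(LAMBDA_TRUST_POLICY),
    )
    for arn in MANAGED_POLICY_ARNS:
        iam_client.attach_role_policy(RoleName=role_name, PolicyArn=arn)
    return role["Role"]["Arn"]
```

`AWSLambdaBasicExecutionRole` lets the function write its `print` output to CloudWatch Logs, which is where the debugging output of the code below ends up.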

```python
import os.path
from urllib.parse import unquote_plus

import boto3
from botocore.exceptions import ClientError
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText
from email.mime.application import MIMEApplication

s3 = boto3.client("s3")


def lambda_handler(event, context):
    print(event)

    SENDER = "<<emailID>>"
    RECIPIENT = "<<emailID>>"
    AWS_REGION = "<<aws-region>>"
    SUBJECT = "Email From S3"

    # The S3 trigger passes the bucket name and object key of the uploaded file
    FILEOBJ = event["Records"][0]
    BUCKET_NAME = str(FILEOBJ["s3"]["bucket"]["name"])
    print(BUCKET_NAME)
    # Keys arrive URL-encoded in S3 event notifications (e.g. spaces become "+")
    KEY = unquote_plus(FILEOBJ["s3"]["object"]["key"])
    print(KEY)

    # Download the object into /tmp, the writable directory in Lambda
    FILE_NAME = os.path.basename(KEY)
    print(FILE_NAME)
    TMP_FILE_NAME = "/tmp/" + FILE_NAME
    print(TMP_FILE_NAME)
    s3.download_file(BUCKET_NAME, KEY, TMP_FILE_NAME)

    ATTACHMENT = TMP_FILE_NAME
    print("attachment", ATTACHMENT)

    BODY_TEXT = "The Object file was uploaded to S3"

    client = boto3.client("ses", region_name=AWS_REGION)

    # Multipart message: a plain-text body plus the file as an attachment
    msg = MIMEMultipart()
    # Add subject, from and to lines.
    msg["Subject"] = SUBJECT
    msg["From"] = SENDER
    msg["To"] = RECIPIENT

    textpart = MIMEText(BODY_TEXT)
    msg.attach(textpart)

    # Close the file after reading it
    with open(ATTACHMENT, "rb") as f:
        att = MIMEApplication(f.read())
    # Use the base file name, not the /tmp path, as the attachment name
    att.add_header("Content-Disposition", "attachment", filename=FILE_NAME)
    msg.attach(att)

    print(msg)

    try:
        # send_raw_email is needed for attachments; send_email only supports text/HTML bodies
        response = client.send_raw_email(
            Source=SENDER,
            Destinations=[RECIPIENT],
            RawMessage={"Data": msg.as_string()},
        )
    except ClientError as e:
        print(e.response["Error"]["Message"])
    else:
        print("Email sent! Message ID:", response["MessageId"])
```
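To check the MIME structure locally without calling SES, the message-building step can be pulled into a pure function. This is a sketch that mirrors the handler above; the function name `build_raw_email` is hypothetical. It returns bytes, which `RawMessage={"Data": ...}` accepts as well as a string.

```python
from email.mime.application import MIMEApplication
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText


def build_raw_email(sender: str, recipient: str, subject: str,
                    body: str, filename: str, data: bytes) -> bytes:
    """Return the raw MIME message that send_raw_email sends."""
    msg = MIMEMultipart()
    msg["Subject"] = subject
    msg["From"] = sender
    msg["To"] = recipient
    msg.attach(MIMEText(body))
    # MIMEApplication base64-encodes the file as application/octet-stream
    att = MIMEApplication(data)
    # Base name only, so the recipient sees "report.csv" rather than a /tmp path
    att.add_header("Content-Disposition", "attachment", filename=filename)
    msg.attach(att)
    return msg.as_bytes()
```

Inside the handler this would be used as `client.send_raw_email(Source=SENDER, Destinations=[RECIPIENT], RawMessage={"Data": build_raw_email(SENDER, RECIPIENT, SUBJECT, BODY_TEXT, FILE_NAME, data)})`, where `data` is the downloaded file's bytes.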

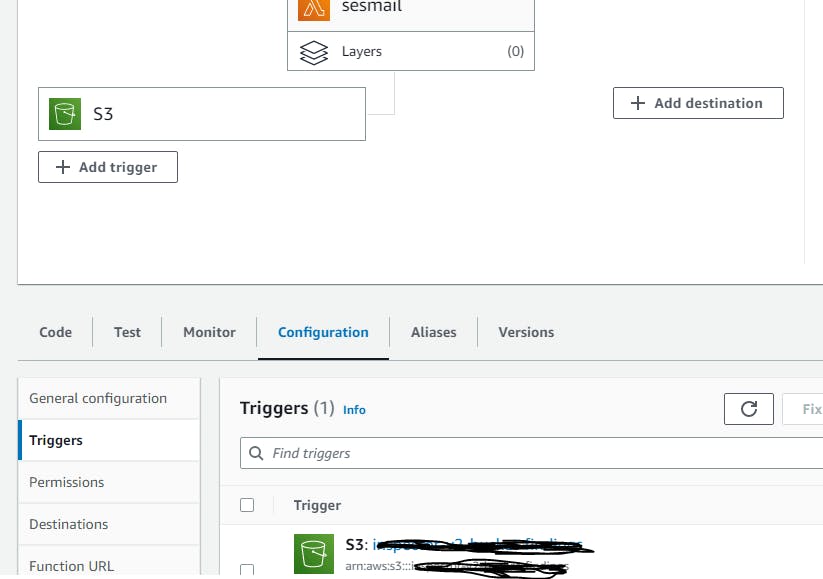

Add your S3 bucket as a trigger for the Lambda function, so that whenever a new CSV file is uploaded to the bucket, the trigger fires and the Lambda function is invoked.
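The console trigger can also be set up with boto3. The sketch below assumes you want only `.csv` uploads to invoke the function: S3 must first be granted permission to invoke it (`add_permission`), and then the bucket's notification configuration points at the function. The function ARN, bucket name and `StatementId` pattern are placeholders.

```python
def csv_trigger_config(function_arn: str) -> dict:
    """Notification config that invokes the function for new .csv objects."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {
                    "Key": {"FilterRules": [{"Name": "suffix", "Value": ".csv"}]}
                },
            }
        ]
    }


def add_s3_trigger(lambda_client, s3_client, bucket: str, function_arn: str) -> None:
    # S3 must be allowed to invoke the function before the notification is saved.
    # StatementId allows only letters, digits, "-" and "_", so dots are replaced.
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId="s3-invoke-" + bucket.replace(".", "-"),
        Action="lambda:InvokeFunction",
        Principal="s3.amazonaws.com",
        SourceArn=f"arn:aws:s3:::{bucket}",
    )
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=csv_trigger_config(function_arn),
    )
```

Note that `put_bucket_notification_configuration` replaces the bucket's whole notification configuration, so merge in any existing rules first.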

Optional: below is a test event JSON you can use to test your Lambda function.

```json
{
  "Records": [
    {
      "eventVersion": "2.1",
      "eventSource": "aws:s3",
      "awsRegion": "us-west-2",
      "eventTime": "1970-01-01T00:00:00.000Z",
      "eventName": "ObjectCreated:Put",
      "userIdentity": {
        "principalId": "AIDAJDPLRKLG7UEXAMPLE"
      },
      "requestParameters": {
        "sourceIPAddress": "127.0.0.1"
      },
      "responseElements": {
        "x-amz-request-id": "C3D13FE58DE4C810",
        "x-amz-id-2": "FMyUVURIY8/IgAtTv8xRjskZQpcIZ9KG4V5Wp6S7S/JRWeUWerMUE5JgHvANOjpD"
      },
      "s3": {
        "s3SchemaVersion": "1.0",
        "configurationId": "testConfigRule",
        "bucket": {
          "name": "<<bucketname>>",
          "ownerIdentity": {
            "principalId": "A3NL1KOZZKExample"
          },
          "arn": "arn:aws:s3:::<<bucketname>>"
        },
        "object": {
          "key": "<<filename>>.csv",
          "size": 1024,
          "eTag": "d41d8cd98f00b204e9800998ecf8427e",
          "versionId": "096fKKXTRTtl3on89fVO.nfljtsv6qko",
          "sequencer": "0055AED6DCD90281E5"
        }
      }
    }
  ]
}
```
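For local tests, only a few of those fields are read by the handler. The sketch below builds a minimal event with just the bucket name and key, and shows how the key should be decoded. It assumes S3's convention of URL-encoding object keys in notifications (spaces become `+`, `/` is left as-is). The helper names are hypothetical.

```python
from urllib.parse import quote_plus, unquote_plus


def make_s3_put_event(bucket: str, key: str) -> dict:
    """Minimal S3 put event containing only the fields the handler reads."""
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                # Mimic S3, which URL-encodes keys in event notifications
                "s3": {
                    "bucket": {"name": bucket},
                    "object": {"key": quote_plus(key, safe="/")},
                },
            }
        ]
    }


def bucket_and_key(event: dict) -> tuple:
    """Extract the bucket name and the decoded object key from the first record."""
    record = event["Records"][0]["s3"]
    return record["bucket"]["name"], unquote_plus(record["object"]["key"])
```

Passing `make_s3_put_event("<<bucketname>>", "<<filename>>.csv")` to `lambda_handler` locally would still call S3 and SES, so it needs valid credentials and a real object.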

Below is a script that reads Amazon Inspector findings from the incoming event, writes them to a CSV file, and uploads the file to an S3 bucket.

```python
import csv

import boto3

s3 = boto3.client("s3")
sns = boto3.client("sns")

SEPARATOR = "-" * 100


def lambda_handler(event, context):
    print(len(event["findings"]))
    message_text = [""]

    # The context manager flushes and closes the CSV before it is uploaded
    with open("/tmp/csv_file.csv", "w", newline="") as csv_file:
        temp_csv_file = csv.writer(csv_file)
        temp_csv_file.writerow(["DESCRIPTION", "REMEDIATION_SUGGESTED", "SEVERITY"])

        for finding in event["findings"]:
            description = str(finding["description"])
            remediation = str(finding["remediation"]["recommendation"])
            severity = str(finding["severity"])

            message_text_str = "DESCRIPTION {0} \n REMEDIATION SUGGESTED {1} \n SEVERITY {2}.\n {3}".format(
                description, remediation, severity, SEPARATOR
            )
            print(message_text_str)

            temp_csv_file.writerow([description, remediation, severity])
            message_text.append(message_text_str)

    print(message_text)

    s3.upload_file("/tmp/csv_file.csv", "inspector-v2-bucket-findings", "final_report.csv")

    msg = "\n".join(message_text)

    # response = sns.publish(
    #     TopicArn="arn:aws:sns:eu-west-1:386288228052:inspector-v2",
    #     Message=msg,
    #     Subject="AWS-Inspector findings",
    # )
```
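The script expects the findings to already be in the event. As a sketch under two assumptions, the CSV can be built in memory instead of in `/tmp`, and the findings can be fetched directly with the Inspector v2 client's `list_findings` paginator. The helper names are hypothetical, and the column layout matches the script above.

```python
import csv
import io


def findings_to_csv(findings) -> str:
    """Render findings as CSV text with the same three columns as the script."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["DESCRIPTION", "REMEDIATION_SUGGESTED", "SEVERITY"])
    for finding in findings:
        writer.writerow([
            str(finding["description"]),
            str(finding["remediation"]["recommendation"]),
            str(finding["severity"]),
        ])
    return buf.getvalue()


def fetch_findings(inspector_client) -> list:
    """Collect all findings page by page (assumes an Inspector v2 client)."""
    findings = []
    for page in inspector_client.get_paginator("list_findings").paginate():
        findings.extend(page["findings"])
    return findings
```

With `body = findings_to_csv(fetch_findings(boto3.client("inspector2")))`, the call `s3.put_object(Bucket="inspector-v2-bucket-findings", Key="final_report.csv", Body=body.encode("utf-8"))` would upload the report without a temporary file. If that bucket is the one wired to the email function, the upload would then trigger the email.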

CONCLUSION:

In this way, we can send S3 files as email attachments using AWS Lambda and automate the workflow in our projects as required.